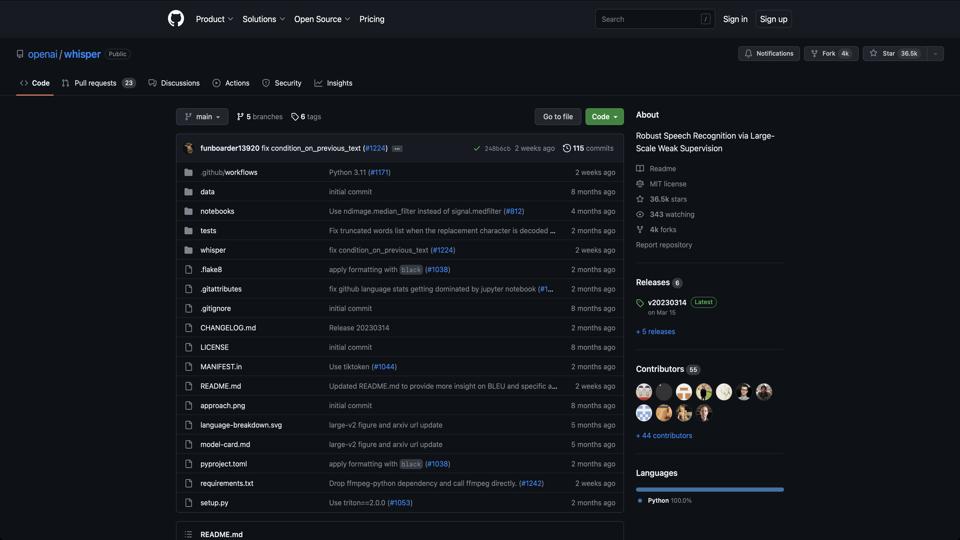

Whisper

Overview of OpenAI Whisper

OpenAI Whisper is an open-source automatic speech recognition (ASR) system developed by OpenAI. Released in 2022, it is designed to transcribe and translate speech in multiple languages with high accuracy. The tool is available on GitHub at https://github.com/openai/whisper and has gained popularity for its robustness in handling diverse audio inputs, including noisy environments and accents. Whisper is trained on a massive dataset of 680,000 hours of multilingual and multitask supervised data, making it versatile for tasks like transcription, translation, and voice activity detection.

Key Features

- Multilingual Support: Handles transcription and translation in nearly 100 languages, including English, Spanish, French, German, and many more.

- Model Variants: Comes in different sizes (tiny, base, small, medium, large) to balance between accuracy and computational requirements.

- Tasks Supported: Speech-to-text transcription, speech translation (e.g., from non-English to English), and language identification.

- Integration: Easy to use via Python API, with support for PyTorch and integration into applications like web apps or scripts.

- Open-Source: Licensed under MIT, allowing free use, modification, and distribution.

Installation and Usage

To get started with Whisper, you need Python 3.8+ and pip. Installation is straightforward:

- Install via pip:

pip install -U openai-whisper - Basic usage example:

import whisper model = whisper.load_model("base") result = model.transcribe("audio.mp3") print(result["text"]) - For better performance, install FFmpeg for audio processing and use a GPU if available (requires CUDA).

Whisper can process various audio formats like MP3, WAV, and M4A, and it supports real-time transcription with some optimizations.

Pros

- Exceptional accuracy, often outperforming commercial alternatives like Google Speech-to-Text in benchmarks.

- Handles noisy audio, accents, and technical jargon effectively.

- Free and open-source, with no API costs or rate limits.

- Active community on GitHub with over 50,000 stars and frequent contributions.

- Versatile for applications like subtitling videos, meeting transcriptions, or building voice assistants.

Cons

- Resource-Intensive: Larger models require significant GPU memory (e.g., large model needs ~10GB VRAM), making it challenging for low-end hardware.

- Slower inference on CPU; real-time transcription may require optimizations or smaller models.

- Limited to audio input; no direct support for video without extraction.

- Occasional hallucinations in transcription, especially with unclear audio.

- Dependency on external libraries like FFmpeg and PyTorch, which can complicate setup for beginners.

Pricing

Whisper is completely free as an open-source tool. However, if you use it via OpenAI’s API (a hosted version), it costs $0.006 per minute of audio processed. The GitHub version allows self-hosting without any fees.

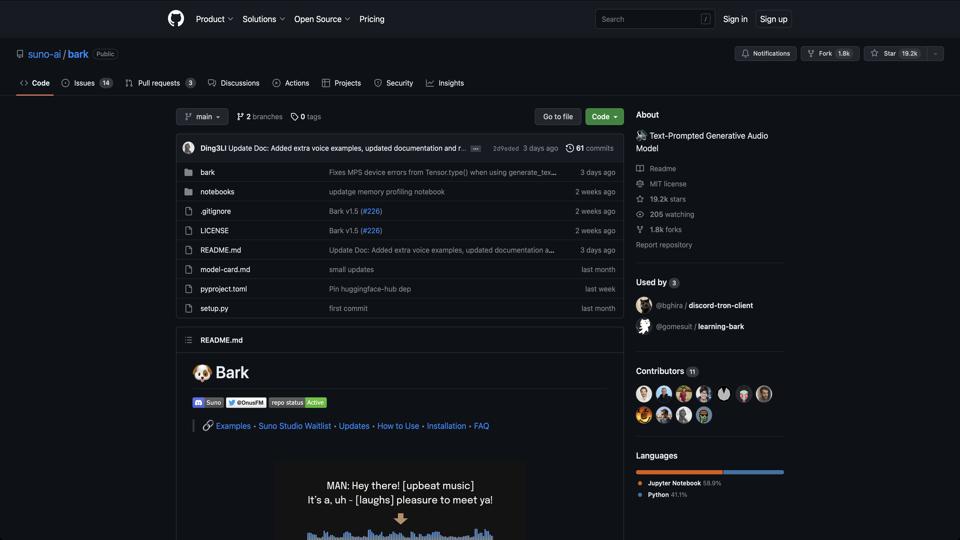

Alternatives

- Google Cloud Speech-to-Text: Commercial, with strong integration but higher costs.

- Mozilla DeepSpeech: Another open-source option, but less accurate and multilingual than Whisper.

- Amazon Transcribe: Cloud-based, suitable for enterprise use with scalability.

Final Verdict

Whisper is a top-tier choice for anyone needing reliable speech recognition without ongoing costs. It’s ideal for developers, researchers, and hobbyists, earning a 9/10 rating for its accuracy and versatility. If you have the hardware, it’s a must-try; otherwise, consider cloud alternatives for heavy workloads.