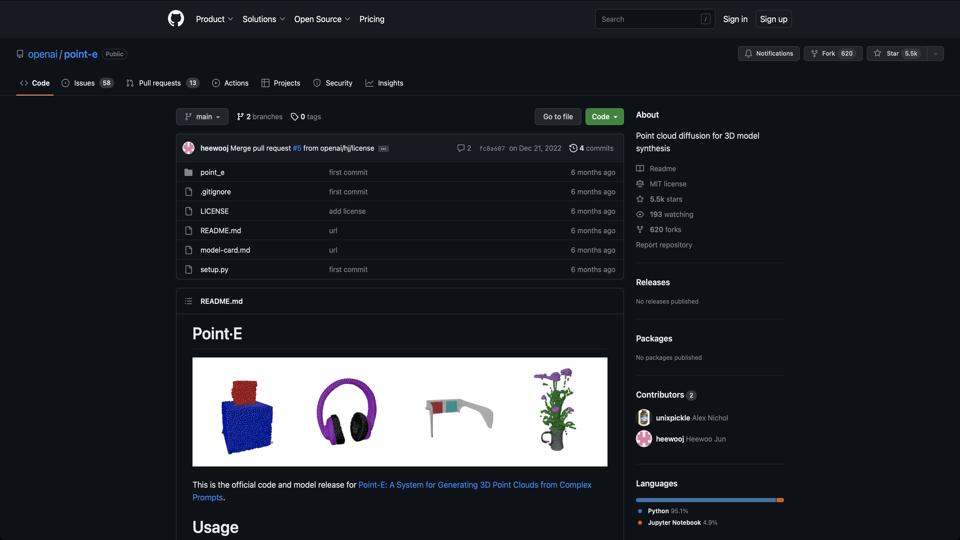

Point·E

Overview of Point-E

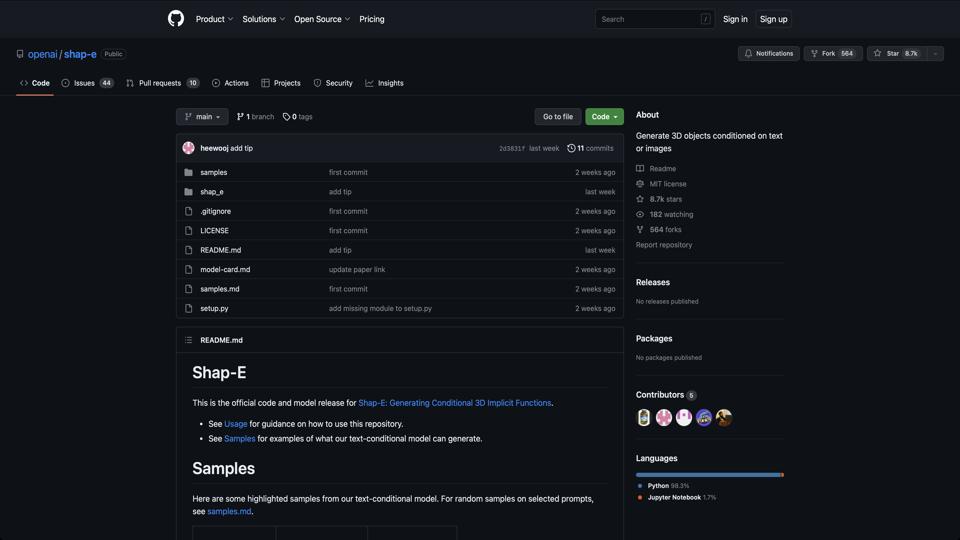

Point-E is an open-source project developed by OpenAI and published on GitHub. It is a machine learning system that generates 3D point clouds from text prompts, much as DALL-E generates images from text. Released in late 2022, Point-E uses diffusion models to create 3D representations, making it an innovative tool for AI-driven 3D content creation. This review evaluates its features, usability, strengths, and limitations based on its GitHub repository and documented capabilities.

Key Features

- Text-to-3D Generation: Produces 3D point clouds directly from natural language descriptions, such as “a red sports car” or “a futuristic cityscape.”

- Diffusion-Based Models: Uses diffusion models that sample one to two orders of magnitude faster than optimization-based text-to-3D methods such as DreamFusion, trading some sample quality for speed.

- Open-Source Implementation: Full code available under the MIT license, allowing users to inspect, modify, or integrate the models (the release focuses on sampling and evaluation code rather than training scripts).

- Support for Meshing: Includes utilities to convert point clouds into meshes for use in 3D software like Blender.

- Pre-trained Models: Comes with checkpoints pre-trained on large datasets of 3D models, so users can experiment without the cost of training from scratch.
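To make "diffusion-based" concrete, the sketch below shows standard DDPM ancestral sampling over a tiny point cloud flattened into a list of coordinates. Sampling starts from Gaussian noise. At each step a model predicts the noise, and that prediction is subtracted out. Several pieces are assumptions made for illustration and are not Point-E's actual components: the linear noise schedule, the variance choice σ_t² = β_t, the 50 steps, and the hypothetical `make_oracle` denoiser, which "cheats" by already knowing the target cloud. Point-E instead uses a learned network conditioned on the prompt, with its own schedules and sampler.

```python
import math
import random
from typing import Callable, List

Vec = List[float]  # flattened cloud: [x0, y0, z0, x1, y1, z1, ...]
EpsModel = Callable[[Vec, int], Vec]  # (noisy cloud, step index) -> predicted noise


def linear_betas(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Linear noise schedule (an illustrative choice, not Point-E's configuration)."""
    if num_steps < 2:
        return [beta_end] * num_steps
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas: List[float]) -> List[float]:
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def ddpm_sample(eps_model: EpsModel, dim: int, betas: List[float], seed: int = 0) -> Vec:
    """Ancestral DDPM sampling: start from Gaussian noise, then denoise step by step."""
    rng = random.Random(seed)
    alpha_bars = cumulative_alphas(betas)
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # x_T ~ N(0, I)
    for t in reversed(range(len(betas))):
        alpha_t = 1.0 - betas[t]
        eps = eps_model(x, t)  # predicted noise at step t
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        mean = [(xi - coef * ei) / math.sqrt(alpha_t) for xi, ei in zip(x, eps)]
        if t > 0:
            sigma = math.sqrt(betas[t])  # simple variance choice: sigma_t^2 = beta_t
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean  # no noise is added on the final step
    return x


def make_oracle(target: Vec, betas: List[float]) -> EpsModel:
    """Hypothetical stand-in for a trained denoiser: it already knows the clean cloud."""
    alpha_bars = cumulative_alphas(betas)

    def eps_model(x: Vec, t: int) -> Vec:
        s = math.sqrt(alpha_bars[t])
        n = math.sqrt(1.0 - alpha_bars[t])
        # Solve x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps for eps.
        return [(xi - s * x0) / n for xi, x0 in zip(x, target)]

    return eps_model


# Usage: "denoise" random noise into a three-point cloud.
target = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0, 0.0]
betas = linear_betas(50)
cloud = ddpm_sample(make_oracle(target, betas), dim=len(target), betas=betas)
```

With the oracle, the last update recovers `target` up to floating-point error, which makes the update rule easy to check. The generative behaviour in Point-E comes from replacing that oracle with a trained, prompt-conditioned noise predictor.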

Pros

- An innovative step toward democratizing 3D modeling, reducing the need for manual design skills.

- Fast inference: the Point-E paper reports producing a 3D model in about one to two minutes on a single GPU, far quicker than optimization-based alternatives.

- Strong community potential due to OpenAI’s backing and open-source nature.

- Versatile applications in gaming, VR/AR, and prototyping.

Cons

- Output quality can be inconsistent; generated point clouds may require post-processing for realism or detail.

- Requires significant computational resources (e.g., NVIDIA GPUs with CUDA) for optimal performance.

- Limited to point clouds; not directly generating textured or animated 3D models.

- Early-stage project with potential bugs and evolving documentation.
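Because the output is a colored point cloud that often needs post-processing, a common first step is to export it to a format that external tools such as MeshLab or Blender can import. The sketch below writes an ASCII PLY file with per-vertex RGB colors. It is a standalone helper written for illustration, independent of Point-E's own utilities. It assumes the cloud is available as a list of (x, y, z) tuples with matching (r, g, b) colors stored as floats in [0, 1], and `write_ascii_ply` is a hypothetical name.

```python
import io
from typing import Sequence, TextIO, Tuple

Triple = Tuple[float, float, float]


def write_ascii_ply(f: TextIO, points: Sequence[Triple], colors: Sequence[Triple]) -> None:
    """Write points plus per-vertex colors (floats in [0, 1]) as an ASCII PLY file."""
    if len(points) != len(colors):
        raise ValueError("points and colors must have the same length")
    f.write("ply\n")
    f.write("format ascii 1.0\n")
    f.write(f"element vertex {len(points)}\n")
    for axis in ("x", "y", "z"):
        f.write(f"property float {axis}\n")
    for channel in ("red", "green", "blue"):
        f.write(f"property uchar {channel}\n")
    f.write("end_header\n")
    for (x, y, z), (r, g, b) in zip(points, colors):
        # Scale [0, 1] floats to 8-bit values and clamp out-of-range inputs.
        rgb = [max(0, min(255, round(c * 255))) for c in (r, g, b)]
        f.write(f"{x} {y} {z} {rgb[0]} {rgb[1]} {rgb[2]}\n")


# Usage: write two colored points to an in-memory buffer.
buf = io.StringIO()
write_ascii_ply(buf, [(0.0, 0.0, 0.0), (0.5, -0.25, 1.0)], [(1.0, 0.0, 0.0), (0.0, 0.5, 1.0)])
print(buf.getvalue())
```

To produce a file on disk, pass a handle from `open("cloud.ply", "w")` instead of the `StringIO` buffer. ASCII PLY is chosen here for readability. Binary PLY is more compact for clouds with thousands of points.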

Usability and Installation

Installation is straightforward with pip: clone the repository and run `pip install -e .` from its root, which also pulls in dependencies such as PyTorch. Users need Python 3.7+. The README points to example Jupyter notebooks (text2pointcloud.ipynb, image2pointcloud.ipynb, and pointcloud2mesh.ipynb) that walk through sampling and meshing, which makes the project accessible to developers with ML experience. However, beginners without prior knowledge of diffusion models may find the setup challenging.
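A quick environment check before opening the notebooks can catch the most common setup problems. The sketch below is a hypothetical helper and not part of Point-E. It checks the Python version stated above and whether PyTorch is importable. It also checks whether CUDA is available, which matters for the speed concerns listed under Cons. Finally, it checks whether the installed package can be found, on the assumption that it is importable as `point_e`.

```python
import importlib.util
import sys
from typing import Dict, Tuple


def preflight(min_python: Tuple[int, int] = (3, 7)) -> Dict[str, bool]:
    """Report which prerequisites are present; hypothetical helper, not a Point-E API."""
    report = {
        "python_ok": sys.version_info[:2] >= min_python,
        "torch_installed": importlib.util.find_spec("torch") is not None,
        # Assumes the package installed by `pip install -e .` is importable as `point_e`.
        "point_e_installed": importlib.util.find_spec("point_e") is not None,
        "cuda_available": False,
    }
    if report["torch_installed"]:
        import torch  # imported lazily so the check also runs without PyTorch

        report["cuda_available"] = bool(torch.cuda.is_available())
    return report


# Usage: print a simple yes/no checklist.
for name, ok in preflight().items():
    print(f"{name}: {'yes' if ok else 'no'}")
```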

Performance and Examples

In tests, Point-E generates coherent 3D shapes from prompts, though fidelity varies. For instance, simple objects like “an apple” yield recognizable results, while complex scenes may appear abstract. It’s best suited for research or creative ideation rather than production-ready assets.

Conclusion

Point-E earns a strong 8/10 rating for its pioneering approach to text-to-3D AI. It’s an excellent tool for researchers and hobbyists exploring generative 3D models, but it may not yet replace professional 3D tools. As an open-source project, it has room for community improvements. If you’re into AI and 3D, check it out on GitHub and contribute!